Process Pages

What is a Language Model?

KEY WORDS:

language models, talk, work, people, text, AI, paper, data, synthetic, bias, thought, machines, case, wrote, world, question, meaning, wordsJust as I described in the previous reflection, the possibilities of a lottery ticket seem endless. Similarly, the possibilities and applications of large language models seem endless, too. But are they?

I've summarized a lecture from Emily Bender to give an introduction to language models. This will help you understand the basis for this grant project and why I've chosen to focus on this area of AI.

“Words change depending on who speaks them”

Maggie Nelson (2015)

But there is no “who” behind Chat GPT.

Emily Bender (2023)

ChatGP-why: When, if ever, is synthetic text safe,

appropriate, and desirable?

By: Emily Bender

From: August 8, 2023

Description (taken from YouTube description): “Ever since OpenAI released ChatGPT, the internet has been awash in synthetic text, with suggested applications including robo-lawyers, robo-therapists, and robo-journalists. I will overview how language models work and why they can seem to be using language meaningfully-despite only modeling the distribution of word forms. This leads into a discussion of the risks we identified in the Stochastic Parrots paper (Bender, Gebru et al 2021) and how they are playing out in the era of ChatGPT. Finally, I will explore what must hold for an appropriate use case for text synthesis.”

High-level CEREMONY Translation:

“synthetic text”- text generated by a machine, potentially using previous text that a human wrote/spoke/strung together

“despite only modeling the distribution of word forms”- this is described more fully below, but synthetic language models do not make meaning, they are like “word calculators” - they spit out calculated text based on equations (algorithms), not based on context, meaning, or intention

context, meaning, intention - exists in the relational, human, outside world - does not exist inside of a language model (could be argued that context, meaning, intention do exist in machines, but only in thin ways)

“text synthesis”- the action machines take when generating text responses - what I’m trying to build, a language model that can synthesize a collection of text in various ways

High-level CEREMONY Translation:

“synthetic text”- text generated by a machine, potentially using previous text that a human wrote/spoke/strung together

“despite only modeling the distribution of word forms”- this is described more fully below, but synthetic language models do not make meaning, they are like “word calculators” - they spit out calculated text based on equations (algorithms), not based on context, meaning, or intention

context, meaning, intention - exists in the relational, human, outside world - does not exist inside of a language model (could be argued that context, meaning, intention do exist in machines, but only in thin ways)

“text synthesis”- the action machines take when generating text responses - what I’m trying to build, a language model that can synthesize a collection of text in various ways

What is a language model?

- better term is a “corpus model” (Veres 2022)

What is it good for?

- spell checking

- transcription

- machine translation

- simplify text entry (think T9 - not having to click every number however many times to get to the letter you want)

What is a “neural language model”?

- neural nets are NOT artificial brains

An “aura of magic” has developed around these terms because the words we use to describe them sound so similar to biology.

- given what has come so far, what is likely to come next? How do words correlate to one another?

- a neural net is trained with “back propagation” (what has come before will inform what comes next)

What is a large language model?

- how many words are in the training data?

- image below shows the size of language models and their growth over the past few years (tokens = words)

- image from Scale: Guide to Large Language Models

![]()

What are large language models good for?

- transcription, machine translation

- summarization

- sentiment analysis (is this a positive or negative statement and about what)

![]()

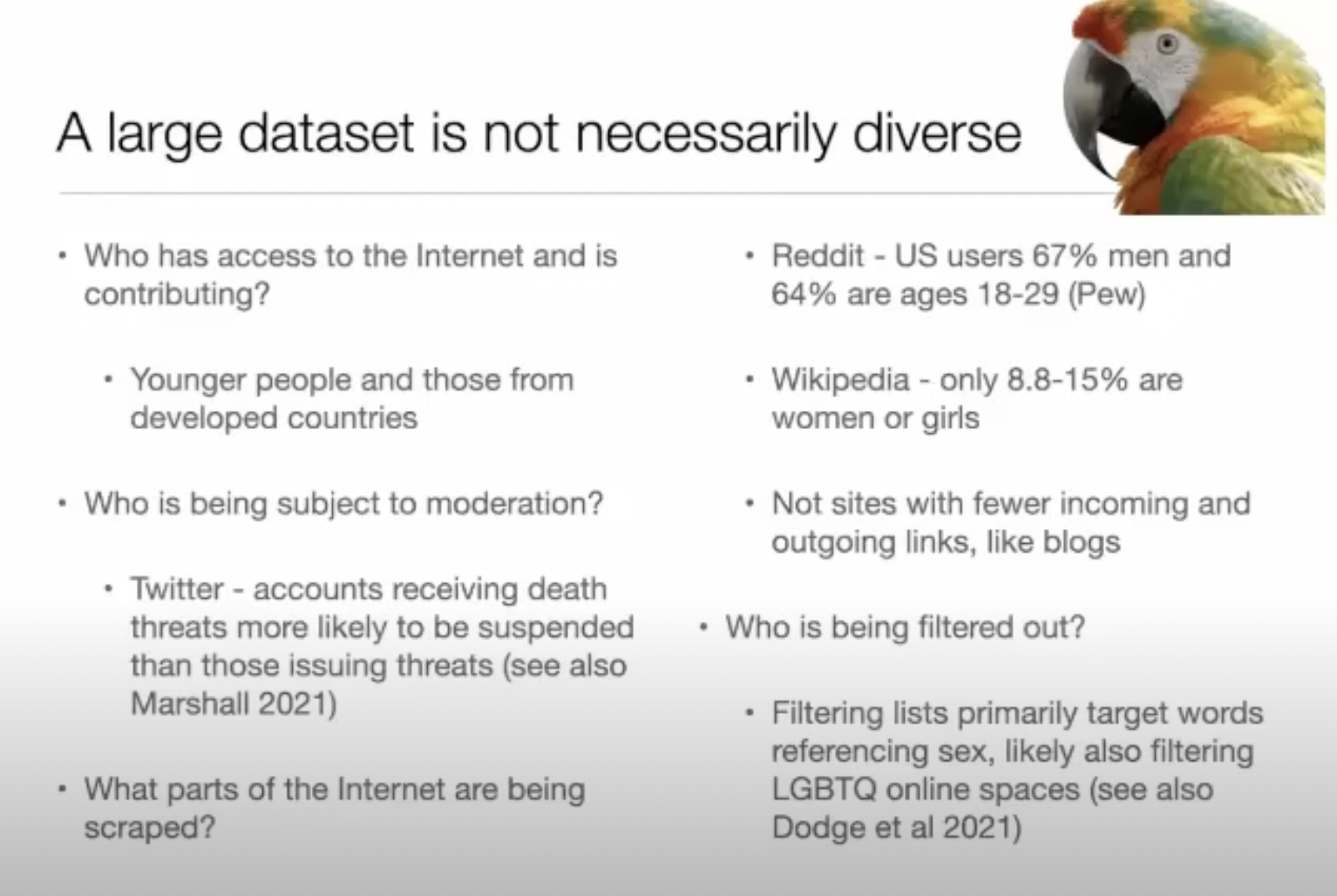

- LLMs can lead to a more hegemonic view of the world based on how their data is collected

- takes patterns of the past to predict patterns in the future

Do we want the patterns of the past to dictate our collective future?

What is generative AI?

- takes these systems models that are meant for classification and ranking and turns them inside out

- produces something that “looks plausible and not artifical”

- “generative AI” is not AI and AGI (these are terms that lack good definitions)

What is generative AI good for?

- to think about this question critically, we have to think about form and meaning

When a user is fluent in a language, it is easy to conflate form and meaning.

- meaning is something EXTERNAL to language

Machines “in a very thin way” understand form and some meaning.

![]()

An example: BERTology

- question: How do babies learn language?

- discovery: found that interaction and joint attention is key (a baby in front of a TV or radio will not yield the learning results that a baby interacting with another person will)

- maybe the baby will learn words, but they will not learn the context, intention, and meaning of the words

- what’s missing when we sit in front of a TV or radio? interaction/meaning making/relationships

How do we apply this learning to generative AI?

- meaning is not found in form alone

- therefore, generative AI machines do not KNOW what any of the words they generate MEAN (at least, not deeply)

- The machine only knows the likelihood of a word coming next. The user makes meaning from the response.

An answer ChatGPT gives doesn’t inherently make sense, the user makes sense of it.

Octopus Example (O is the Chatbot, A is a human)

![]()

![]()

Meaning making requires access to an outside world where communicative intents live.

Stochastic Parrot Article

On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

Origin Story:

- Timnit Gebru (working @ Google) and Emily Bender decided to write a paper based on gaps in research

- Google approved the paper for publication but in peer review process Google changed their mind

- said that Google employees had to remove their names from the publication or remove the paper

- 2 co-authors were fired and kept their names on the paper

- 3 co-authors removed their names

What the article explores: Are ever-larger language models inevitable/necessary?

- What are the financial and environmental costs to using LLMs? And to whom are the costs impacting the most?

- Could the energy going into LLMs be better used elsewhere?

- Marginalized communities are not seeing benefits from LLMs

- If there is lacking documentation of data, the potential for harm without recourse increases

Are we over-excited about something that isn’t really that exciting?

- We can’t help but make sense of language we are competent in, we tend to automatically imagine a mind behind language we see

- danger in treating LLMs as everything machines (the idea they have answers for everything)

LLMs have text that takes the FORM of a useful answer - it’s up to the user to decide if that answer is useful or meaningful. Humans play a crutial role as meaning makers.

Dangers with synthetic text

- arguably they are more dangerous as they are trained because we will become more trustful of them (trusting of something lacking meaning)

- the more we trust synthetic text without participation or evalutaion, the more we erase ourselves from the role we play

- search results are also impacted by advertising interests: there is a risk that we start to trust search results as accurate representations of the world or accurate representations of how people talk about the world and neither are true

A danger of synthetic text its potential to limit our access to information and erase the human as a vital sense-maker.

![]()

![]()

![]()

Links and learning recommendations from Emily Bender:

- better term is a “corpus model” (Veres 2022)

- given a collection of text (a corpus) representing a language, how likely is a given string to appear?

What is it good for?

- spell checking

- transcription

- machine translation

- simplify text entry (think T9 - not having to click every number however many times to get to the letter you want)

What is a “neural language model”?

- neural nets are NOT artificial brains

An “aura of magic” has developed around these terms because the words we use to describe them sound so similar to biology.

- given what has come so far, what is likely to come next? How do words correlate to one another?

- a neural net is trained with “back propagation” (what has come before will inform what comes next)

What is a large language model?

- how many words are in the training data?

- image below shows the size of language models and their growth over the past few years (tokens = words)

- image from Scale: Guide to Large Language Models

What are large language models good for?

- transcription, machine translation

- summarization

- sentiment analysis (is this a positive or negative statement and about what)

- LLMs can lead to a more hegemonic view of the world based on how their data is collected

- takes patterns of the past to predict patterns in the future

Do we want the patterns of the past to dictate our collective future?

What is generative AI?

- takes these systems models that are meant for classification and ranking and turns them inside out

- produces something that “looks plausible and not artifical”

- “generative AI” is not AI and AGI (these are terms that lack good definitions)

What is generative AI good for?

- to think about this question critically, we have to think about form and meaning

When a user is fluent in a language, it is easy to conflate form and meaning.

- meaning is something EXTERNAL to language

Machines “in a very thin way” understand form and some meaning.

An example: BERTology

- question: How do babies learn language?

- discovery: found that interaction and joint attention is key (a baby in front of a TV or radio will not yield the learning results that a baby interacting with another person will)

- maybe the baby will learn words, but they will not learn the context, intention, and meaning of the words

- what’s missing when we sit in front of a TV or radio? interaction/meaning making/relationships

How do we apply this learning to generative AI?

- meaning is not found in form alone

- therefore, generative AI machines do not KNOW what any of the words they generate MEAN (at least, not deeply)

- The machine only knows the likelihood of a word coming next. The user makes meaning from the response.

An answer ChatGPT gives doesn’t inherently make sense, the user makes sense of it.

Octopus Example (O is the Chatbot, A is a human)

Meaning making requires access to an outside world where communicative intents live.

Stochastic Parrot Article

On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

Origin Story:

- Timnit Gebru (working @ Google) and Emily Bender decided to write a paper based on gaps in research

- Google approved the paper for publication but in peer review process Google changed their mind

- said that Google employees had to remove their names from the publication or remove the paper

- 2 co-authors were fired and kept their names on the paper

- 3 co-authors removed their names

What the article explores: Are ever-larger language models inevitable/necessary?

- What are the financial and environmental costs to using LLMs? And to whom are the costs impacting the most?

- Could the energy going into LLMs be better used elsewhere?

- Marginalized communities are not seeing benefits from LLMs

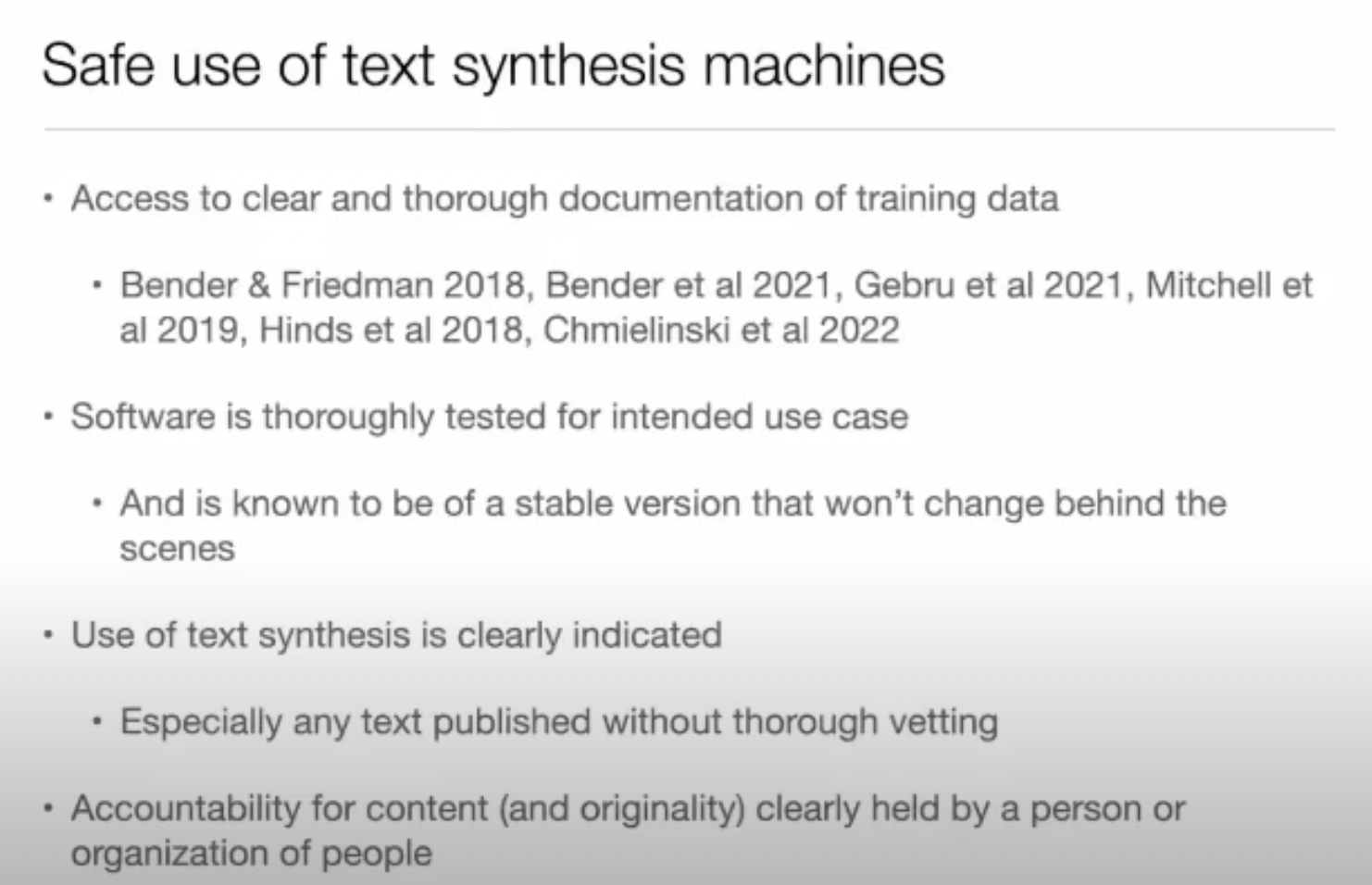

How big is too big? Too big to document is too big to use.

- If there is lacking documentation of data, the potential for harm without recourse increases

Are we over-excited about something that isn’t really that exciting?

- We can’t help but make sense of language we are competent in, we tend to automatically imagine a mind behind language we see

- danger in treating LLMs as everything machines (the idea they have answers for everything)

LLMs have text that takes the FORM of a useful answer - it’s up to the user to decide if that answer is useful or meaningful. Humans play a crutial role as meaning makers.

Dangers with synthetic text

- arguably they are more dangerous as they are trained because we will become more trustful of them (trusting of something lacking meaning)

- the more we trust synthetic text without participation or evalutaion, the more we erase ourselves from the role we play

- search results are also impacted by advertising interests: there is a risk that we start to trust search results as accurate representations of the world or accurate representations of how people talk about the world and neither are true

A danger of synthetic text its potential to limit our access to information and erase the human as a vital sense-maker.

Links and learning recommendations from Emily Bender:

- Podcast: Radical AI

- also have a curriculum based on their podcast episodes

- Dr. Casey Fiesler TikTok: @professorcasey

- Podcast: Tech won't save us

- Podcast: Mystery AI Hype Theatre 3000

- Good for understanding hype and cutting through it

CEREMONY GOALS

- Use Bender’s “criteria for a good use case” to build model

- Promote user as the active, necessary sense-maker of data

- Content of language model is documented with data statements

- Interrogate bias and think about function of model (I will be held accountable for language included in model and must care for stories in the conversation archive)

BRIEF DESCRIPTION OF THE PROJECT

Ceremony is a web platform that hosts an evolving catalogue of community conversations. The content is designed to bring together works by both professional and emerging artists. This project will build a live language model with the catalogue, allowing readers to participate in the creation of art via an interface designed with equity and accessibility in mind. The project aims to democratize digital tools, increase digital and data literacy through discussion, and pave the way for other creative and accessible AI applications within the artistic community.